To support adaptive management, Magaldi et al. have developed a deep-learning model to analyse images and videos from ground-level camera traps in African tropical forests.

A familiar problem

If you work in wildlife research or protected-area management, you’ll know the feeling: camera traps are brilliant at “being there” 24/7 in dense forest, but they come with a hidden cost—an avalanche of photos and videos that someone has to sort, label and check.

In African rainforests, that workload can be especially intense. Animals can be partially hidden, moving fast, or recorded at night. And teams are often operating with limited time, staff and computing power. As a result, valuable camera-trap data can sit unanalysed for months, far too long to inform day-to-day management decisions.

That’s the gap we set out to address.

What we built

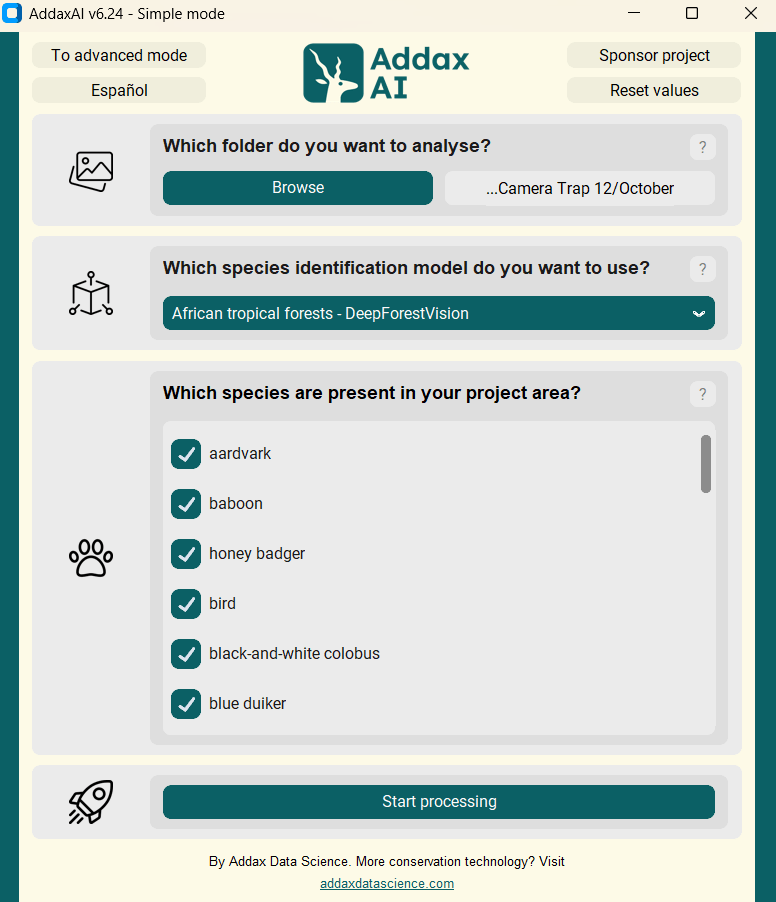

With an international team of researchers across Europe and the Congo Basin, we developed DeepForestVision, a deep-learning model designed specifically for ground-level camera traps in African tropical forests, and the only available tool that can process both photos and videos in the field.

Forest camera-trap footage includes challenging lighting, partial views, fast movement, and a huge range of camera settings. Building a tool that can cope with that diversity required training data from many habitats. We assembled what is, to our knowledge, one of the largest labelled camera-trap datasets for these habitats: over 2.7 million photos and 220,000 videos, collected from 63 research sites across 11 African countries.

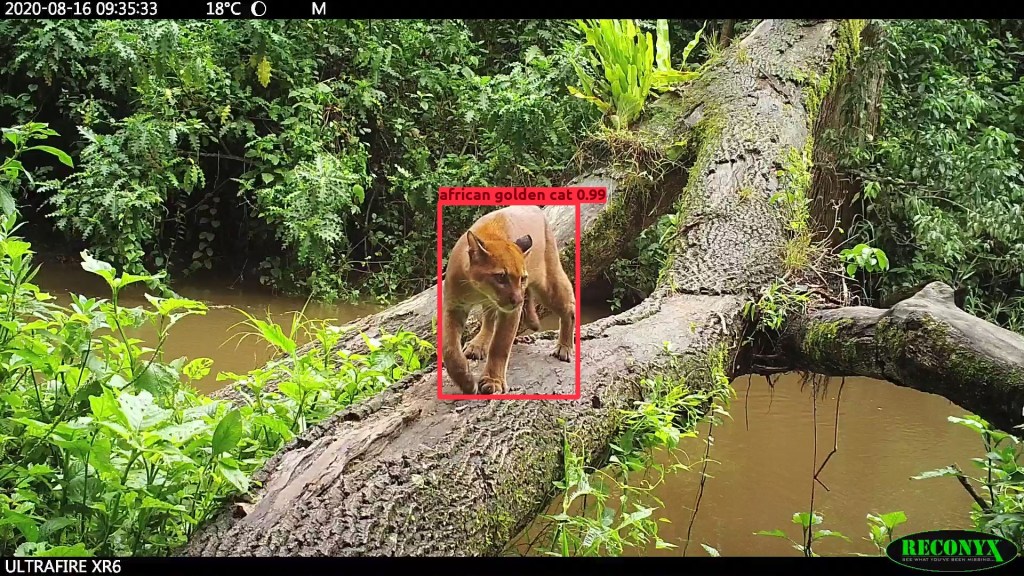

DeepForestVision can recognise 33 non-human vertebrate taxa (mostly mammals), and it also flags humans, vehicles and blank images, a crucial feature when blanks make up a large share of field datasets. It uses a two-step approach: (1) it detects whether an animal, person or vehicle is present and crops the relevant part of the image; (2) it classifies the cropped animal.
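To make the two-step idea concrete, here is a minimal Python sketch of a generic detect-then-classify pipeline. It is not DeepForestVision's code or AddaxAI's interface: `Detection`, `crop`, `label_image`, the `"animal"`/`"person"`/`"vehicle"` categories and the 0.5 detection threshold are all hypothetical, and the "models" are toy stand-ins. It shows how a detector can filter out blanks and pass only the cropped animal to a species classifier.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Hypothetical types: an image is a grid of pixel values (rows of columns),
# and a bounding box is (x_min, y_min, x_max, y_max) in pixel coordinates.
Image = List[List[int]]
Box = Tuple[int, int, int, int]


@dataclass
class Detection:
    category: str      # assumed categories: "animal", "person" or "vehicle"
    confidence: float  # detector score in [0, 1]
    box: Box


def crop(image: Image, box: Box) -> Image:
    """Return the part of the image inside the bounding box."""
    x_min, y_min, x_max, y_max = box
    return [row[x_min:x_max] for row in image[y_min:y_max]]


def label_image(
    image: Image,
    detect: Callable[[Image], Sequence[Detection]],
    classify: Callable[[Image], str],
    det_threshold: float = 0.5,  # assumed cut-off, not from the article
) -> List[str]:
    """Step 1: detect and crop. Step 2: classify each animal crop."""
    kept = [d for d in detect(image) if d.confidence >= det_threshold]
    if not kept:
        # Nothing confident enough was detected, so flag the image as blank.
        return ["blank"]
    labels = []
    for det in kept:
        if det.category == "animal":
            # Only the cropped region is sent to the species classifier.
            labels.append(classify(crop(image, det.box)))
        else:
            # People and vehicles are reported straight from the detector.
            labels.append(det.category)
    return labels


# Toy stand-ins for real models, for illustration only.
def fake_detector(image: Image) -> List[Detection]:
    return [
        Detection("animal", 0.9, (1, 0, 3, 2)),
        Detection("person", 0.3, (0, 0, 1, 1)),  # below threshold, dropped
    ]


def fake_classifier(patch: Image) -> str:
    # Pretend brighter crops are duikers and darker ones are rodents.
    return "duiker" if sum(map(sum, patch)) > 10 else "rodent"


frame = [[0, 5, 6, 0], [0, 7, 8, 0], [0, 0, 0, 0]]
print(label_image(frame, fake_detector, fake_classifier))   # ['duiker']
print(label_image(frame, lambda img: [], fake_classifier))  # ['blank']
```

Separating detection from classification means the classifier only ever sees a tight crop around the animal, and images with no confident detection can be set aside as blanks without running the species model at all.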

We evaluated DeepForestVision on two very different real-world datasets: ~15,000 videos from Kibale National Park, Uganda, and ~700,000 photos from Lopé National Park, Gabon. It reached 87.7% and 98.9% accuracy respectively, outperforming existing alternatives by 13.1% to 45%.

A key aim was accessibility. DeepForestVision is freely available through the AddaxAI interface, which can run offline and doesn't require programming skills.

Why this matters for management

For conservation teams, speed and reliability matter because decisions are often time-sensitive. A quicker turnaround from raw footage to species lists and activity patterns supports adaptive management: for example, responding to seasonal shifts or emerging threats. Instead of manually screening everything, teams can focus human effort on verifying key records (rare species, priority zones, uncertain classifications). Automatically flagging human detections can also support patrol planning and help evaluate human pressure inside and outside protected areas.
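One way a team might put this into practice is a simple triage rule that sends only certain records to a human reviewer. The Python sketch below is a hypothetical workflow, not part of DeepForestVision or AddaxAI: the `Record` fields, the `triage` function, the example taxa and zones, and the 0.8 confidence cut-off are all illustrative assumptions that a team would set for its own site.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple


@dataclass
class Record:
    taxon: str         # predicted label, e.g. a species name or "human"
    confidence: float  # classifier score in [0, 1]
    zone: str          # management zone where the camera is deployed


def triage(
    records: Iterable[Record],
    rare_taxa: Set[str],
    priority_zones: Set[str],
    min_confidence: float = 0.8,  # assumed cut-off, tune per project
) -> Tuple[List[Record], List[Record]]:
    """Split records into (needs_review, auto_accepted)."""
    needs_review: List[Record] = []
    auto_accepted: List[Record] = []
    for rec in records:
        # Flag rare species, priority zones and uncertain classifications.
        flagged = (
            rec.taxon in rare_taxa
            or rec.zone in priority_zones
            or rec.confidence < min_confidence
        )
        (needs_review if flagged else auto_accepted).append(rec)
    return needs_review, auto_accepted


# Illustrative records, not real data.
records = [
    Record("red duiker", 0.95, "A"),  # common, confident, routine zone
    Record("pangolin", 0.97, "A"),    # rare species -> review
    Record("red duiker", 0.55, "A"),  # low confidence -> review
    Record("human", 0.90, "B"),       # priority zone -> review
]
review, accepted = triage(records, rare_taxa={"pangolin"}, priority_zones={"B"})
print(len(review), len(accepted))  # 3 1
```

Rules like these keep people focused on the records where a mistake would matter most, while high-confidence routine detections flow straight into species lists and activity summaries.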

Because DeepForestVision is robust to different camera-trap protocols and simple to deploy in low-resource conditions, it fits the realities of research and conservation programmes and supports biodiversity monitoring across African rainforests over space and time.

Yet no automated system is perfect, particularly in rainforests. For instance, small, fast-moving animals at night (such as rodents and squirrels) can be missed if the detection step fails to pick them up. That's why we see DeepForestVision as a decision-support tool that handles the bulk of the work at scale, while people remain essential for validation, especially for rare or high-stakes records.

This is a Plain Language Summary discussing a recently published article in Ecological Solutions and Evidence.